Domain Specificity in Machine Learning

Taking a break from essay-style writing and returning to regular posting, this article explores domain-specific machine learning, an example of it from the semiconductor industry, and the ethical implications of the approach.

The no free lunch (NFL) theorems of Wolpert [1] and Wolpert and Macready [2] state that there is no best general-purpose learning or optimization algorithm: when performance is averaged over all possible problems, any two algorithms perform identically. In other words, to achieve maximum performance on a particular learning task, the algorithm must be tailored to that task [3]. Applying a task-specific algorithm to problems outside its intended task will generally yield subpar results, which is consistent with the NFL theorem. Conversely, to extract the most novel information from a learning task, it is in the user’s best interest to tailor the algorithm to that specific task. This hyper-optimization can be achieved with domain-specific knowledge, and it is where domain-specific machine learning (DSML) comes into play.
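The averaging claim can be checked by brute force on a toy problem. The sketch below is not taken from [1] or [2]: it assumes a three-point search space with binary objective values, defines two hypothetical search strategies (`fixed_order` and `adaptive`), and averages the best value each finds within m evaluations over all 2^3 possible objective functions. Like the theorem, it assumes the algorithms never revisit a point.

```python
from fractions import Fraction
from itertools import product

X = [0, 1, 2]  # hypothetical tiny search space
Y = [0, 1]     # hypothetical objective values (higher is better)

def fixed_order(f, m):
    """Non-adaptive search: evaluate points in the fixed order 0, 1, 2."""
    return [f[x] for x in X[:m]]

def adaptive(f, m):
    """Adaptive search: start at x = 2, then pick the next unvisited point
    based on the value just observed. Never revisits a point."""
    visited, values = [], []
    x = 2
    for _ in range(m):
        visited.append(x)
        values.append(f[x])
        remaining = [p for p in X if p not in visited]
        if not remaining:
            break
        # After a good value go to the smallest remaining point, else the largest.
        x = remaining[0] if values[-1] == 1 else remaining[-1]
    return values

def average_best(algorithm, m):
    """Average, over every objective f: X -> Y, of the best value found in m evaluations."""
    scores = []
    for outputs in product(Y, repeat=len(X)):
        f = dict(zip(X, outputs))
        scores.append(max(algorithm(f, m)))
    return Fraction(sum(scores), len(scores))

for m in (1, 2, 3):
    print(m, average_best(fixed_order, m), average_best(adaptive, m))
# 1 1/2 1/2
# 2 3/4 3/4
# 3 7/8 7/8
```

The two strategies tie at every budget m. One algorithm can only outperform another on a restricted subset of problems, and choosing that subset well is exactly where domain knowledge enters.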

The “domain specificity” of DSML could naively be understood as applying machine learning (ML) to domain-specific data. Taken further, this view reduces ML to a trial-and-error process [3] for achieving a particular outcome. Instead, domain-specific knowledge can be integrated into the structure of the algorithm by viewing the DSML task as an unsupervised search through a hypothesis space, H, or a data generation space, G, rather than as the optimization of a decision-making process. This framing is what makes DSML “fundamentally different” from traditional ML: DSML attempts to arrive at new data generation and hypothesis spaces, G′ and H′ respectively, while traditional ML attempts to accurately model a given data generation space within a given hypothesis space using an optimized learning algorithm, A [4].

Because the DSML framework deliberately does not describe this process as an optimization, it applies to a wider variety of learning problems, particularly unsupervised learning, where the target of the learning model is not defined. However, a DSML process still needs a clearly defined goal for the learning process to converge; domain-specific knowledge must inform not only the definition of these end-goals but also the judgment of when they have been met. As we will see in our example, domain-specific objectives inform the structure of the learning algorithm and provide an iterative understanding of the data, instead of a single, strict decision, leading to a more transparent and less underspecified learning process.

From this DSML view, the results of the process can generate new data or a better understanding of existing data for the user [3,4], informing the user’s decision instead of the algorithm making the decision itself. Of course, in this search, the iterative learning process must provide the user with novel information to demonstrate its added value, as summarized in Postulate 1 of [3]. This is an iterative process, “searching for a hypothesis space assumption in view of a data generator that can change from one iteration to the next” [4]. At each iteration, the user can contribute domain-specific knowledge to guide the learning process, which in turn improves the user’s understanding of the data by providing novel information at each iteration [3,4].


A variant of the NFL theorem, dubbed the local no free lunch (LNFL) theorem, applies within each iteration. It arises because the hypothesis space, H, and data generation space, G, change between iterations i and i + 1, which changes the learning task as well [4]. A consequence of the LNFL theorem is that any decision made by the learning algorithm could run counter to the user’s end-goal, which is to better update H and G; the algorithm should therefore refrain from making decisions for the user. Instead, the DSML learning process should “focus on how to summarize the data from the current iteration i, to assist the expert’s decision making towards establishing i + 1” [4]. The core capabilities of a DSML process, which focus on the dynamic H and G spaces, can be summarized by the following four requirements of a co-ML, or complementary ML, approach [4]:

1. The capability to evaluate the coverage of the samples on the hypothesis space.
2. The capability to perform an exhaustive search of a user-defined hypothesis space, so that a “no models found” result can be returned.
3. The capability to evaluate a given model’s applicability to the current data.
4. The capability to perform an unsupervised, sample-based analysis.
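Capabilities 2 and 3 can be illustrated with a deliberately tiny example. The sketch below is a hypothetical toy, not the method of [4]: the hypothesis space is assumed to be a handful of one-dimensional threshold rules, and the names `make_threshold_space`, `exhaustive_search`, and `applicability` are illustrative. Because the search is exhaustive, an empty result is a meaningful “no models found” answer rather than a failure to converge.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Sample = Tuple[float, int]  # (feature value, label): hypothetical toy data format
Hypothesis = Callable[[float], int]

def make_threshold_space(thresholds: Sequence[float]) -> Dict[str, Hypothesis]:
    """Hypothetical user-defined hypothesis space: one rule 'x >= t' per threshold t."""
    return {f"x >= {t}": (lambda x, t=t: int(x >= t)) for t in thresholds}

def exhaustive_search(space: Dict[str, Hypothesis], samples: List[Sample]) -> List[str]:
    """Test every hypothesis and return all that fit every sample (possibly none)."""
    return [name for name, h in space.items() if all(h(x) == y for x, y in samples)]

def applicability(h: Hypothesis, samples: List[Sample]) -> float:
    """Fraction of the current samples that a given model explains."""
    return sum(h(x) == y for x, y in samples) / len(samples)

space = make_threshold_space([0.5, 1.5, 2.5])
batch_1 = [(0.2, 0), (1.0, 0), (2.0, 1), (3.0, 1)]
batch_2 = batch_1 + [(2.7, 0)]  # one new sample that no threshold rule can explain

for label, batch in (("iteration 1", batch_1), ("iteration 2", batch_2)):
    found = exhaustive_search(space, batch)
    print(label, found if found else "no models found")
# iteration 1 ['x >= 1.5']
# iteration 2 no models found

print(applicability(space["x >= 1.5"], batch_2))  # 0.8
```

On the first batch exactly one rule survives. A single conflicting sample in the second batch empties the result, which signals to the user that the hypothesis space itself, not the fit, needs revisiting, while the previously accepted rule still explains 80% of the new batch.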

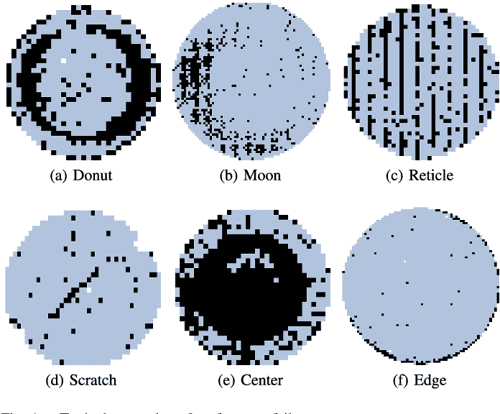

These aspects of DSML can be seen in the main example provided by [3,4], which addresses pattern recognition in silicon wafer maps for semiconductor design and test (D & T).

The example chosen for these dissertations [3,4] tangibly demonstrates the domain-informed search within a hypothesis space that DSML must perform. Traditionally, identifying patterns of failed dies on a diced silicon wafer (wafer map pattern recognition, or WMPR) was treated as a multi-class classification task. However, determining the number of classes is itself part of the learning task. This question may not have a single “correct” answer, so its answer needs to be informed by the domain experts who assess the wafer maps. The lack of a definite learning end-goal means that this task cannot be framed as an optimization problem, and the convergence of the learning algorithm must be informed by domain-specific knowledge. In the case of WMPR, the wafer maps are clustered into lower-bound and upper-bound groupings.

Instead of having one learning algorithm, A, optimize a model to produce a definitive outcome, the user identifies the best outcome for their particular task over an iterative process, drawing on the understanding that the learning algorithm provides. For WMPR, a lower-bound clustering algorithm, L, uses Tucker decomposition, a higher-order generalization of singular value decomposition (SVD), to capture features of the wafer maps along different dimensions, and generative adversarial networks (GANs) to assess separability at each training iteration [4]. The upper-bound clustering algorithm, U, uses a variational autoencoder to recognize patterns on a sample-by-sample basis. Together, these algorithms bound the clustering between N clusters for N samples (lower bound) and a single class containing all N samples (upper bound). A wafer map may also fail to match any previously identified pattern, a “no model found” scenario; DSML accounts for this by allowing the user to modify the hypothesis space in the way that is most informative [4].

Notice that, with the combination of these algorithms, the user can update the pattern classes after each output of the learning process and ultimately arrive at a decision that is best for the user’s case, rather than a generic decision issued by the algorithm. By providing lower and upper bounds on the potential clusters, the algorithm is not handing a decision to the user but is instead giving the user a better understanding of the hypothesis space, which can be updated in subsequent iterations by adjusting the network architecture or the clusterings. Perhaps the clearest consequence of DSML is the transparency of the learning algorithm: because the user must supply relevant domain-specific knowledge, the learning process must be transparent. If the learning process were a black box, the user could not update the hypothesis or data generation spaces in later iterations of the framework. The black-box approach is also diametrically opposed to the goal of DSML, which is not to optimize an objective but rather to provide the user with an understanding of the data. A summary of this iterative workflow can be seen in Figure 8.4 of [4].
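To make the Tucker step more concrete, the sketch below computes a truncated Tucker decomposition by higher-order SVD (HOSVD) using only NumPy. It is an illustration under stated assumptions, not the lower-bound algorithm L of [4]: the wafer maps are random toy data, the ranks are arbitrary, HOSVD is just one standard way to obtain a Tucker decomposition, and the GAN-based separability check is omitted entirely. The helper names (`unfold`, `mode_product`, `hosvd`, `reconstruct`) are hypothetical.

```python
import numpy as np

def unfold(tensor: np.ndarray, mode: int) -> np.ndarray:
    """Mode-n unfolding: bring axis `mode` to the front and flatten the remaining axes."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)

def mode_product(tensor: np.ndarray, matrix: np.ndarray, mode: int) -> np.ndarray:
    """Mode-n product: apply `matrix` (shape J x I_mode) along axis `mode`."""
    result = np.tensordot(matrix, np.moveaxis(tensor, mode, 0), axes=(1, 0))
    return np.moveaxis(result, 0, mode)

def hosvd(tensor: np.ndarray, ranks):
    """Truncated higher-order SVD: one standard way to compute a Tucker decomposition."""
    factors = []
    for mode, rank in enumerate(ranks):
        # Leading left singular vectors of each unfolding give that mode's factor matrix.
        u, _, _ = np.linalg.svd(unfold(tensor, mode), full_matrices=False)
        factors.append(u[:, :rank])
    core = tensor
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)  # project onto each mode's subspace
    return core, factors

def reconstruct(core: np.ndarray, factors) -> np.ndarray:
    """Multiply the factor matrices back into the core tensor."""
    out = core
    for mode, u in enumerate(factors):
        out = mode_product(out, u, mode)
    return out

# Hypothetical data: six 8x8 pass (0) / fail (1) wafer maps stacked into one tensor.
rng = np.random.default_rng(0)
maps = (rng.random((6, 8, 8)) < 0.2).astype(float)

core, factors = hosvd(maps, ranks=(3, 4, 4))
print(core.shape)                  # (3, 4, 4)
print([u.shape for u in factors])  # [(6, 3), (8, 4), (8, 4)]
approx = reconstruct(core, factors)
print(np.linalg.norm(maps - approx) / np.linalg.norm(maps))  # relative error of the compressed model
```

With full ranks (6, 8, 8) the reconstruction is exact up to floating-point error; lowering the ranks trades reconstruction accuracy for a smaller set of per-dimension features that a clustering step could then work with.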

The understanding of the data that the user gains from a DSML workflow can also reveal underspecification in an algorithm’s learning process. Underspecification is a phenomenon in ML in which a training process can converge to multiple, equally strong outcomes, even when the model and training data do not change [5]. One proposed remedy is “training models with credible inductive biases” [5], that is, building domain-specific knowledge into the training of the model as inductive biases. However, the authors caution against over-regularizing at the expense of model flexibility, and suggest including regularization that is relevant to the learning task to “incorporat[e] domain expertise without compromising the powerful prediction abilities of modern ML models” [5]. The DSML framework addresses underspecification by shifting the focus from optimizing an objective to informing the user of underspecification in the dataset [3]. Outlier analysis in WMPR illustrates this: assessing whether a single customer return is an outlier against limited data exposes underspecification. When compared against a larger, but still limited, dataset, neither the customer-return die nor a separate die that performed similarly appeared to be an outlier, contradicting what the user knows to be true and revealing underspecification in the larger dataset. To combat this, the user, informed by the DSML framework, can build a probabilistic model of the dataset and extrapolate an outlier threshold at 10 parts per million (PPM) [3], which more accurately captures the nuance of a larger population than a single wafer can.
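Two toy computations can make this paragraph concrete. Both are hypothetical sketches rather than the analyses in [3] or [5]. The first shows underspecification in miniature: two linear models fit the training data equally well because two features happen to coincide there, yet they disagree on a new sample. The second assumes, purely for illustration, that a die’s test measurement is Gaussian, fits that model to a handful of made-up values with Python’s `statistics.NormalDist`, and reads off the upper cut-off with a 10 PPM tail; the source does not say which probabilistic model was used.

```python
from statistics import NormalDist

import numpy as np

# Part 1: underspecification in miniature (hypothetical toy data).
# In the training data, feature 2 happens to equal feature 1.
x1 = np.array([0.0, 1.0, 2.0, 3.0])
X_train = np.column_stack([x1, x1])
y_train = x1.copy()

w_a = np.array([1.0, 0.0])  # model A relies only on feature 1
w_b = np.array([0.0, 1.0])  # model B relies only on feature 2
train_error_a = np.mean((X_train @ w_a - y_train) ** 2)
train_error_b = np.mean((X_train @ w_b - y_train) ** 2)
print(train_error_a, train_error_b)  # 0.0 0.0: indistinguishable on training data

x_new = np.array([1.0, 5.0])  # a new sample where the two features disagree
pred_a, pred_b = x_new @ w_a, x_new @ w_b
print(pred_a, pred_b)  # 1.0 5.0: the "equivalent" models diverge

# Part 2: extrapolating a 10 PPM outlier threshold from a fitted distribution.
# Assumption: the measurement is roughly Gaussian; the values below are made up.
measurements = [1.02, 0.98, 1.01, 0.99, 1.00, 1.03, 0.97, 1.00]
model = NormalDist.from_samples(measurements)
ppm = 10
threshold = model.inv_cdf(1 - ppm * 1e-6)  # upper cut-off with a 10-in-a-million tail
print(f"flag dies measuring above {threshold:.4f}")
```

With only eight values, the 10 PPM cut-off rests almost entirely on the Gaussian assumption, which is precisely the kind of modeling choice a domain expert should scrutinize before acting on it.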

The understanding that comes from addressing both transparency and underspecification empowers the user of a DSML framework to make the decision best suited to their use case, instead of letting the algorithm blindly lead them. Returning to the basic theorem that supports DSML, an implication of the NFL theorem is that “for DSML contexts, the learning algorithm A should not make final decisions for the experts” [4]. Given the explainability and generalizability that follow from its iterative, domain-informed approach, a DSML model not only should not be used to make definitive decisions; it cannot be, because of the nature of the information the framework provides. It does not produce binary classifications or definite regression values; rather, it aims to provide the user with an understanding of the data that is, simultaneously, informed by the user. This understanding extends not only to the data generation space, G, of the types of data used, but also to the hypothesis space, H, of possible answers that could fit the data. The user can then update both spaces, as well as the type and structure of the learning algorithm itself (which should already have been tuned to the learning task at hand to prevent underspecification from inconsequential changes), to produce a more informed view of the data. The decision-empowering, rather than decision-making, nature of DSML makes it an intrinsically more ethical algorithmic framework than black-box approaches.

Empowering users, such as clinicians, with information rather than decisions, and evaluating whether an algorithm is autonomous or assistive, are two key considerations in identifying the ethical implications of ML applications in healthcare [6]. Such domain-specific ML approaches help clinicians avoid “‘catastrophic failures’ and implicit biases in the training sets [that] black box, neural-network based systems are vulnerable to” [6]. Providing information about the underspecification present in a learning outcome would encourage the clinical user to update the data or hypothesis space to obtain results more applicable to their particular patient case, without losing the generalizability of the learning process.

[1] D. H. Wolpert, “The lack of a priori distinctions between learning algorithms,” Neural Computation, vol. 8, no. 7, pp. 1341–1390, 1996.

[2] D. H. Wolpert and W. G. Macready, “No free lunch theorems for optimization,” IEEE Transactions on Evolutionary Computation, vol. 1, no. 1, pp. 67–82, 1997.

[3] M. Nero, Domain-Specific Machine Learning - A No-Free-Lunch Perspective. PhD thesis, University of California, Santa Barbara, 2022.

[4] C. Shan, Domain-Specific Machine Learning - A Human Learning Perspective. PhD thesis, University of California, Santa Barbara, 2022.

[5] A. D’Amour, K. Heller, D. Moldovan, B. Adlam, B. Alipanahi, A. Beutel, C. Chen, J. Deaton, J. Eisenstein, M. D. Hoffman, F. Hormozdiari, N. Houlsby, S. Hou, G. Jerfel, A. Karthikesalingam, M. Lucic, Y. Ma, C. McLean, D. Mincu, A. Mitani, A. Montanari, Z. Nado, V. Natarajan, C. Nielson, T. F. Osborne, R. Raman, K. Ramasamy, R. Sayres, J. Schrouff, M. Seneviratne, S. Sequeira, H. Suresh, V. Veitch, M. Vladymyrov, X. Wang, K. Webster, S. Yadlowsky, T. Yun, X. Zhai, and D. Sculley, “Underspecification presents challenges for credibility in modern machine learning.”

[6] D. S. Char, M. D. Abràmoff, and C. Feudtner, “Identifying ethical considerations for machine learning healthcare applications,” American Journal of Bioethics, vol. 20, no. 11, pp. 7–17, 2020.