It's ChatGPT's World, We're Just Living In It

Why are LLMs like ChatGPT so groundbreaking? Why has this been the type of machine learning to catch fire and why now?

We are living in an AI-focused (if not AI-forward) world. This is unavoidable and undeniable. While the underlying principles of this field have been around for the better part of a century (shoutout to Alan Turing), this is the first time we are living in a face-to-screen, everyday-integrated AI society. How did we get here, though? And why is this AI boom happening now?

We have been seeing the effects of AI in our lives for quite some time, quietly and dutifully operating in the background, as autocomplete behind text messages and recommender systems for determining a Friday night reality TV show binge[1]. However, we now have an algorithm that isn’t packaged into some API on top of an API. We can directly interact with ChatGPT and it has undoubtedly (whether you like it or not) changed the way we do search. Search is a pillar of the internet that was formerly, and seemingly unconquerably, dominated by Google, and ChatGPT has entered the arena to shake things up and redefine what it means to search within the vast repository of collective human knowledge. OpenAI’s ChatGPT was the first algorithm to “break the internet” (to use an incredibly dated, millennial phrase).

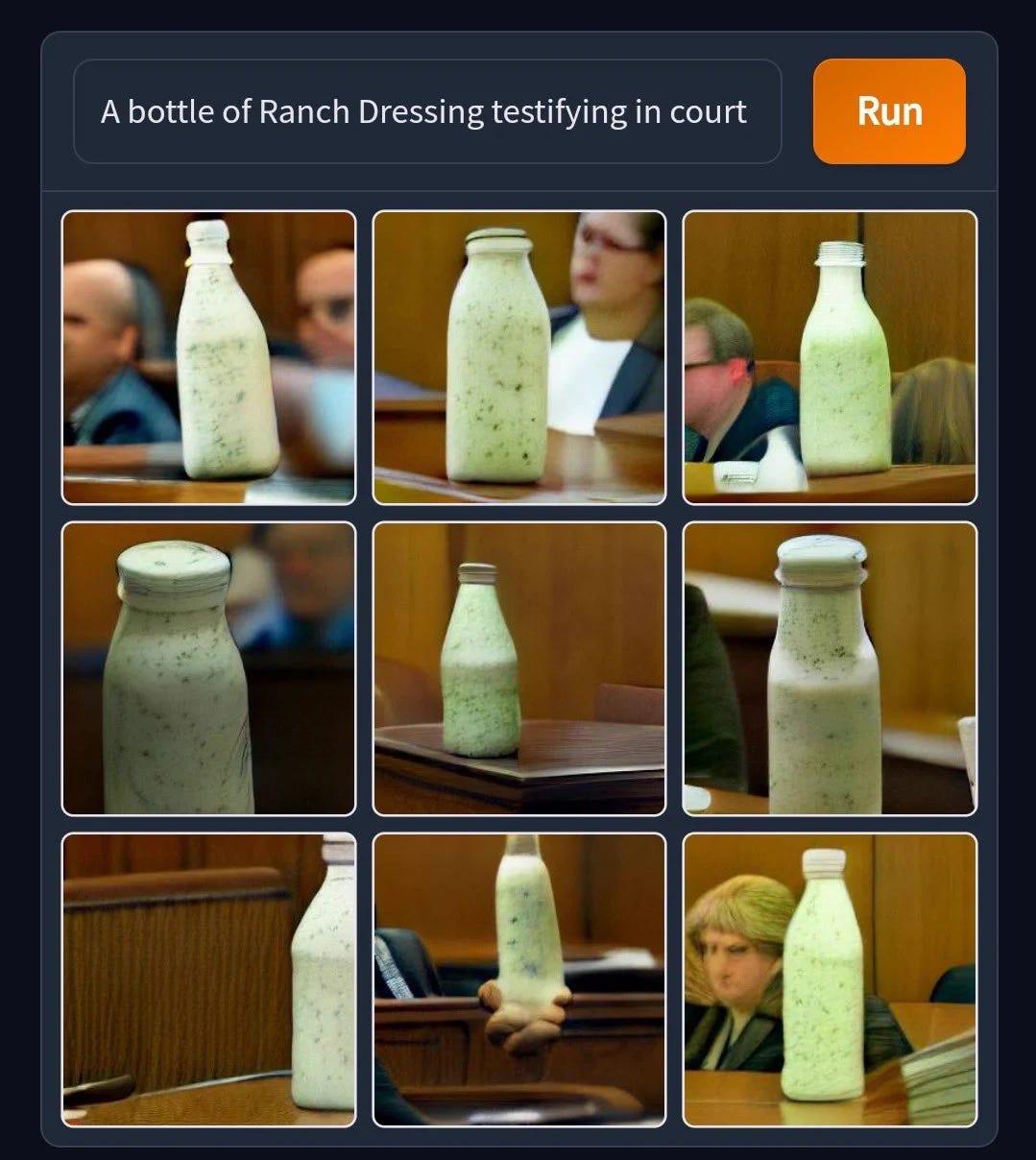

However, you could argue that this isn’t the first time we’ve seen an AI like this catch fire. Before ChatGPT, there was Dall-E, OpenAI’s generative image algorithm to create works of art (a separate conversation all its own) from your wildest, undreamt dreams. Arguably though, Dall-E was not as pervasive as ChatGPT was. There were several months earlier this year where anywhere you turned people were talking about and talking to ChatGPT. Dall-E, at most, seemed to be a passing curiosity for people to play with, study, and create with. Now, the AI-powered generative art scene is definitely something that has been on the rise in the last couple of years, powered by advances like Dall-E, but I’m interested in why an algorithm like ChatGPT gets integrated into workplaces, homes, even research centers, in 2023 while Dall-E, powered by fundamentally similar technology (transformer networks), doesn’t reach nearly the same level of prominence. Other algorithms, like IBM’s Watson and Google DeepMind’s AlphaZero, had their time in the sun, but ultimately faded into obscurity, overshadowed by the ubiquity of ChatGPT.

I think there are several ways to attack this question. There is the timing itself, along with the timing of these algorithms relative to each other. There is the nature of the algorithms, one being visual and the other verbal. And there is the political and economic climate in which these algorithms were released, along with a myriad of other factors.

Before diving into this line of inquiry, I want to clarify that I am using ChatGPT as a synecdoche for LLMs as a whole. While ChatGPT clearly pulled away as the main contender for conversational AI, many other versions and applications (see: here, here, and here) popped up soon after. And now, like an AI version of the space race, these algorithms are getting stronger and stronger every day. And they are getting noticed like no algorithm has gotten noticed before.

Researchers used to toil away in labs, with little to no outside interest in the magic that their algorithms were spinning, save for a niche interest group here or there. Now, when I tell people that I do machine learning research, their ears perk up. People have a connection to AI; it’s not some esoteric concept. If you were to pick someone off the street, they would likely share their own, personal definition of what machine learning and AI are.

But 5, 10, 20 years ago, that would not have been the case. I think part of why ChatGPT and other LLMs have reached celebrity status and staying power is the fact that they were launched in a post-2020 world. Would ChatGPT have had just as much success in a pre- or non-pandemic world? I’m not sure. But what I do know is that we have redefined what it means to learn in a traditional academic environment.

As a third-year graduate student finishing up classes, I saw first-hand how abruptly learning had to pivot and the whiplash it gave students. We had to learn how to learn in this new, mainly virtual, world. And when some of these virtual learning practices stuck around, ChatGPT clicked into place. We were already so used to being face-to-screen after the past 3 years that perhaps having a conversation with an AI (something not as unfeeling as a search bar, but perhaps not as engaging as a wine night FaceTime with your best friends) was refreshing. Having these types of conversations with AIs isn’t new to us: we have Siri, Alexa, and even Cortana.

But asking Alexa to put something on your grocery list is different from asking ChatGPT a question that you don’t know the answer to. Even when you ask Siri to do a search, you know Siri isn’t tapping her (its?) own personal knowledge repository and using critical thinking to answer your question. No, rather you tell (or ask, if you’re polite to your AI assistants like I am in the hope that they will spare you in the AI uprising[2]) this disembodied voice to execute a task. You don’t really have a conversation with it. (Side note: the anthropomorphizing of AI assistants, especially female ones as they usually are, is a separate, tangential, but equally interesting space to learn about and explore.)

Now, I know ChatGPT isn’t really having a conversation with you either. It’s not using critical thinking or engaging with you exactly like a human would. However, it does a damn good job of mimicking it. And that’s one of the benefits I’ve heard of ChatGPT: yes, you could browse through potentially ones (gasp!) of Google pages to get to a result that you would then have to parse into your own context. Or you could have an LLM pre-chew it for you. And it seems a little less lonely to have your search results personalized with exclamation marks and gentle instructions rather than the stark white search page we’ve gotten used to, especially when we’re adjusting back into a society in which human interaction is no longer as much of a limited resource.

Which leads me to my second exploration: ChatGPT and Dall-E have fundamentally similar technology but different outputs, and I believe that’s why one skyrocketed to fame while the other, well, not so much. Accessibility is huge when it comes to science in general and especially with a topic as complicated as machine learning. Not everyone has the expertise, intuition, and/or interest to digitally create a piece of art, but everyone communicates with language. I think part of the reason ChatGPT, and LLMs in general, took off so much more than other types of algorithms is that they touched on a basic cornerstone of our society and existence as humans: language. Dall-E is also, ultimately, a language-based model. The original Dall-E was itself a generative pre-trained transformer (GPT) model, a version of GPT-3 trained on text-image pairs that built on earlier work like Image GPT. Of course, you interact with Dall-E through language, but the overall interaction is one-shot, or at least not as easily iterated on as interacting with ChatGPT. You prompt the algorithm with a piece of text and it generates an image based on that text. Now you could have these one-and-done interactions with ChatGPT as well, but there’s something about having a conversation (or at least the facsimile of one) that spurs on ideas and encourages people to dig deeper and continue the interaction. I think that’s exactly why we are seeing the first AI household name: while other algorithms like Watson are seen as a curiosity, LLMs further our curiosity in an organic and accessible way.

Finally, this interest in AI is seeping, slowly but surely, into the buildings on the Hill. It’s clear that our legislature lags far behind the pace of invention, but politicians are starting to notice the interest society as a whole is taking in AI. New policies like the CHIPS and Science Act aim to encourage American innovation in these areas. Politicians are listening to people like Sam Altman, Gary Marcus, and other experts and heavy-hitters in the AI scene.

I think this is not necessarily the cause of, but symptomatic of, a feedback loop: AI is seeing more developments, people are paying attention, and CEOs/politicians want to drive progress (and make money while doing it, let’s #BeReal here), which causes more developments, and so on. It’s that second step though that is really interesting: people are paying attention. Of course, scientific progress has been marching on for years but now, again, people can engage with an AI from the comfort of their own laptop. AI is being marketed to the masses and I think this will shape the future of the research field and the resulting tech. Because as we’ve seen with every other major technological advancement, you can’t put it back in the box.

[1] If you’re wondering, Love Island UK is my binge of choice thanks to the Hulu recommender system.

[2] For legal purposes, this is a joke, but I really do say “please” and “thank you” to my Siri for the bit.