Physics and Fine-tuning

"Tuning" into the study of the fundamentals of our universe to analyze general machine learning.

The hallucinations of ChatGPT and other LLMs have become infamous. I’ve noticed that many of these hallucinations, which test the boundaries of ChatGPT’s “knowledge,” occur when the question being asked is too niche or specific. For example, ChatGPT is great at collecting primary sources on fundamental physics discoveries, but ask it about the source of a specific aspect of the theory of supersymmetry and it will confidently produce nonexistent papers.

One way to avoid this type of hallucination is through a process called “fine-tuning.” For ChatGPT, this is where the user takes an instance of the general transformer-based model (any of the original GPT-3 base models) and trains it further with specific prompts and examples pertaining to the task at hand. According to OpenAI, this type of training leads to lower-latency requests (because you don’t have to provide examples with the prompts), saved tokens (due to shorter prompts), and, ultimately, higher-quality completions (aka results). Fine-tuning seems like a reasonable way to get specific with your LLM and avoid some of the issues that can crop up.
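To make “specific prompts and examples” concrete, here is a minimal sketch of preparing such a training set. It assumes the prompt/completion JSON Lines layout used by the legacy GPT-3 fine-tuning workflow; the example pairs, the file name, and the helpers `write_training_file` and `read_training_file` are hypothetical, and no API call is made.

```python
import json
import os
import tempfile

# Hypothetical prompt/completion pairs; a real training set needs many more.
EXAMPLES = [
    {"prompt": "Which conserved quantity corresponds to translational symmetry?",
     "completion": " Momentum."},
    {"prompt": "Who proved the theorem linking symmetries to conserved quantities?",
     "completion": " Emmy Noether."},
]


def write_training_file(examples, path):
    """Write one JSON object per line (JSON Lines) and return the line count."""
    count = 0
    with open(path, "w", encoding="utf-8") as handle:
        for example in examples:
            # Skip incomplete pairs rather than write a malformed record.
            if not example.get("prompt") or not example.get("completion"):
                continue
            handle.write(json.dumps(example, ensure_ascii=False) + "\n")
            count += 1
    return count


def read_training_file(path):
    """Read the file back as a list of dicts, ignoring blank lines."""
    with open(path, encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]


if __name__ == "__main__":
    path = os.path.join(tempfile.gettempdir(), "physics_finetune.jsonl")
    written = write_training_file(EXAMPLES, path)
    print(f"wrote {written} examples to {path}")
```

The point of the sketch is only that fine-tuning data is a curated list of question and answer pairs: whatever the model “learns” about your niche topic is whatever you hand-pick here.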

This “fine-tuning” process has a namesake in theoretical particle physics. While particle physics and ML transformers may not seem to have much in common, I believe that examining fine-tuning in physics can help develop our intuition for fine-tuning in machine learning (and, perhaps, vice versa).

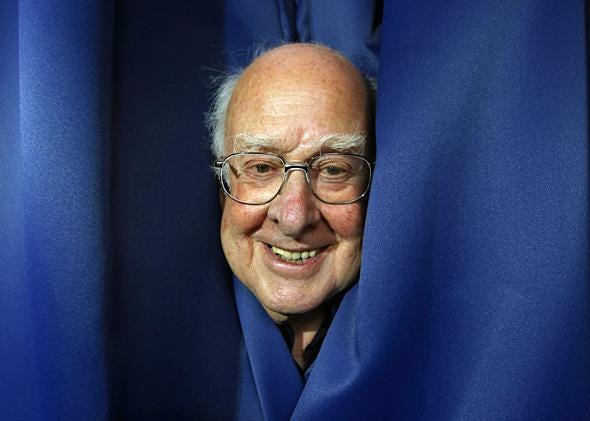

The fundamental theory of the observable universe can be summed up in a relatively neat (with some consolidating notation) and elegant equation known as the Standard Model (SM). It includes fundamental forces (electromagnetic, strong, weak), matter (like you and me), and even how matter gets mass (shout out to Peter Higgs and François Englert)¹.

What does this have to do with ChatGPT? I’m getting there, I promise. But first, back to the Standard Model: it’s a very powerful equation that describes much of our universe to an unprecedented degree, but it’s not perfect, or rather, not all-encompassing. There are many aspects of our universe that are not described by the Standard Model (like gravity, dark matter, matter-antimatter asymmetry, etc.). One of these outstanding matters is a mathematical inconsistency known as the hierarchy problem. This problem actually arises because of the most recently confirmed discovery: the Higgs particle (and its corresponding field). Throughout the Standard Model there are already corrections that need to be made due to quantum effects (for mathematicians: think of these terms as non-leading-order terms in a Taylor series expansion; non-mathematicians: see below*).

However, with the introduction of the Higgs field, the quantum correction to its squared mass is proportional to the square of the ultraviolet cut-off scale parameter² (which delineates the maximal allowed energy or shortest allowed distance). If we take this parameter to be equal to the Planck mass (not an unreasonable assumption), this means that these supposedly tiny quantum corrections are suddenly on the order of 10 to the power 19 (in GeV, the energy unit particle physicists use). Oh sorry, squared, so 10 to the power 38! That’s more than the number of stars in the universe (roughly 10 to the power 22), by a lot, without anything to cancel it out.³
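Written out schematically, the scaling looks like the following. This is a sketch, not a full calculation: the symbols are my own labels, coupling and loop factors are dropped, and the measured Higgs mass of about 125 GeV is added here for comparison.

```latex
\delta m_H^2 \;\propto\; \Lambda_{\mathrm{UV}}^2,
\qquad
\Lambda_{\mathrm{UV}} \sim M_{\mathrm{Pl}} \sim 10^{19}\,\mathrm{GeV}
\;\Longrightarrow\;
\delta m_H^2 \sim 10^{38}\,\mathrm{GeV}^2
\;\gg\;
m_H^2 \approx (125\,\mathrm{GeV})^2 \approx 1.6 \times 10^{4}\,\mathrm{GeV}^2 .
```

Here $\delta m_H^2$ is the quantum correction to the squared Higgs mass, $\Lambda_{\mathrm{UV}}$ is the ultraviolet cut-off, and $M_{\mathrm{Pl}}$ is the Planck mass. The gap between the two sides is more than thirty orders of magnitude.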

*Translated: let’s say you want to buy a new pair of shoes, and you have a coupon. Great! These “quantum corrections” are like the tax, usually something that is a lot less than the cost of the shoes (or in this case, the mass of a particle). In fact, your coupon is for 10%, just enough to exactly cancel out the tax! But, in the hierarchy problem, the tax isn’t a fraction of the cost of the product⁴. Instead, the tax is proportional to the net worth of Jeff Bezos times 10 million dollars, squared. I don’t think your coupon is going to cover that…

Clearly these ginormous second-order effects are an issue. And, under our current model, physicists have dealt with this in the most elegant, sophisticated way possible: they fudge the numbers. Okay, it’s a bit more complicated than that but this is called fine-tuning (sound familiar?), where you have an inconsistency in your model so you adjust the parameters accordingly. Think of it like when you’re taking a math test and your answer for x is not listed in the multiple choice options. So you go back, smudge the 3 into a 2, and pick the closest answer listed. Clearly, something is not right.
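To get a feel for how delicate this number-fudging is, here is a toy calculation. It assumes the correction is simply the cut-off squared (no coupling or loop factors), uses a rounded Higgs mass of 125 GeV, and ignores the sign conventions of the real calculation; all names are hypothetical.

```python
import math

HIGGS_MASS_GEV = 125   # observed Higgs mass, rounded (GeV)
CUTOFF_GEV = 10**19    # cut-off at the Planck scale, order of magnitude only (GeV)


def quantum_correction(cutoff_gev):
    """Toy correction to the squared mass: simply the cut-off squared (GeV^2)."""
    return cutoff_gev**2


def tuned_bare_mass_sq(observed_mass_gev, correction):
    """Bare squared mass needed so that bare + correction = observed^2."""
    return observed_mass_gev**2 - correction


def digits_of_tuning(observed_mass_gev, correction):
    """Roughly how many decimal digits of the bare value must be set just right."""
    return math.log10(abs(correction) / observed_mass_gev**2)


correction = quantum_correction(CUTOFF_GEV)
bare = tuned_bare_mass_sq(HIGGS_MASS_GEV, correction)

# Python integers are exact, so the cancellation can be checked without rounding.
physical = bare + correction

# Nudge the bare value by one part in 10^30 and the result is ruined.
nudged_physical = (bare + bare // 10**30) + correction

if __name__ == "__main__":
    print("correction (GeV^2):        ", correction)
    print("tuned bare value (GeV^2):  ", bare)
    print("physical mass^2 (GeV^2):   ", physical)
    print("after a 1-in-10^30 nudge:  ", nudged_physical)
    print("digits of tuning required: ", round(digits_of_tuning(HIGGS_MASS_GEV, correction), 1))
```

In this toy setup, two enormous numbers have to agree to more than thirty digits to leave behind something as small as the observed value, which is exactly the smudge-the-3-into-a-2 move on a cosmic scale.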

The need for fine-tuning in the current underlying theory of our universe is a very strong indication that the Standard Model is not the holistic theory we’ve been searching for. It suggests that there is some information we’re not capturing: a missing piece of the puzzle, a more basic understanding beneath the underpinnings. Now this is where I could launch into auxiliary theories that have been proposed to address issues like fine-tuning (supersymmetry!) but I won’t. Instead, I’ll finally bring it back to ChatGPT.

If fine-tuning in particle physics is indicative of an underlying issue, maybe it is in general machine learning too. Instead of attempting to carefully adjust the parameters of your model with hand-picked training prompts and examples, why not just change the model? And change the model in a smart and efficient way. Change the model to a model that knows the information it’s supposed to. A model that has that missing puzzle piece. In physics, we call the most basic (most fundamental, not necessarily simple) concepts “first principles” (like conservation of energy, or the symmetries covered in the aside at the end). These first principles have guided and are guiding us to a deeper understanding of our universe. Getting lost in the details and complications of the universe is easy, just like getting lost in the mathematical inner workings of an LLM. But, if we let our first principles guide us, we could come to a deeper understanding of our algorithm, leading to more insightful results and more compact descriptions.

What are the machine learning first principles we should employ? Well, that’s up to you, dear user. What is the problem you’re trying to tackle with machine learning? How can domain-specific knowledge inform the design of your algorithm, the collection of your data, and the workflow of the process? You may find that incorporating domain-specific knowledge, for example through user intervention in the algorithm’s learning process, even leads to more interpretable results, a more transparent model, and, ultimately, more ethical AI. If your algorithm starts out smarter thanks to these first principles, it doesn’t have to spend time learning concepts we already know. It can spend more time elucidating more interesting patterns in the data, ideally leading to stronger performance. Instead of building models with more than a trillion parameters in an attempt to capture, well, everything, use the fundamental understanding related to the task at hand to consolidate some of those parameters in a meaningful way, hopefully leading to a more efficient and compact model.
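As a toy illustration of consolidating parameters with domain knowledge (my own example, not a recipe for LLMs): suppose you want to model the height of a ball thrown straight up. A generic quadratic fit would need three coefficients, but if we assume the known physics (constant gravity, launch from height zero), only one free parameter remains, the launch speed. The data, names, and constants below are hypothetical.

```python
G = 9.81  # gravitational acceleration in m/s^2, treated as known


def height(t, v0):
    """Height of a ball thrown straight up at speed v0 (m/s), after t seconds."""
    return v0 * t - 0.5 * G * t**2


def fit_launch_speed(times, heights):
    """Least-squares estimate of v0, the only free parameter left.

    Moving the known gravity term to the left gives y + 0.5*G*t^2 = v0 * t,
    a straight line through the origin, so v0 = sum(t*r) / sum(t*t).
    """
    shifted = [y + 0.5 * G * t**2 for t, y in zip(times, heights)]
    numerator = sum(t * r for t, r in zip(times, shifted))
    denominator = sum(t * t for t in times)
    return numerator / denominator


# Hypothetical measurements: a true launch speed of 12 m/s plus small, fixed errors.
TIMES = [0.2, 0.4, 0.6, 0.8, 1.0]
ERRORS = [0.01, -0.02, 0.015, -0.005, 0.0]
HEIGHTS = [height(t, 12.0) + e for t, e in zip(TIMES, ERRORS)]

estimated_v0 = fit_launch_speed(TIMES, HEIGHTS)

if __name__ == "__main__":
    print(f"estimated launch speed: {estimated_v0:.3f} m/s")
```

The model has nothing to learn about gravity because we told it, so every data point goes toward pinning down the one thing we didn’t know.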

Fine-tuning should not be the accepted norm in machine learning. It’s not in physics, and that’s why (or one of the reasons) we continue to analyze our universe, seeking more understanding. We should do the same with our machine learning models. Just as we’ve learned lots in our search for deeper understanding, our algorithms might too.

An Aside on Symmetry

The equation of the Standard Model and the math describing the theory exhibit properties known as symmetries (yes, plural, because there is more than one). Symmetry is not some unintuitive mathematical concept: it’s exactly what you see when you look in a mirror. That is an example of reflection symmetry, but there are other types (like rotational). And when we get into the subatomic realm, there are even more, and these symmetries can get really weird (like time-reversal). Symmetries are very important in nature and their importance is reflected (pun intended) in the Standard Model.

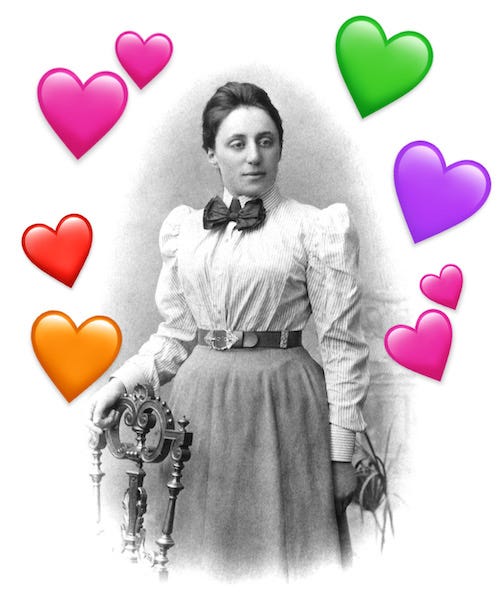

One of the reasons symmetries are so important is that, whether they are respected or not (broken symmetries are a thing!), they give us clues as to how the physical laws of our universe should behave. When a symmetry is respected, we say the laws are invariant under it. Example: we know that translational symmetry (shifting everything to a different position in space) is respected in the Standard Model. This means that if you throw a ball, take a step to your right, and throw a ball again, the laws of physics should not change because you stepped to your right: the ball will still follow a parabolic path. (Aside: one of, in my opinion, the coolest results in physics says that for every continuous symmetry there is a corresponding conserved quantity. This is known as Noether’s Theorem and was proven by mathematician Emmy Noether in 1915. For translational symmetry, the conserved quantity is momentum, whose conservation is a fundamental law and one of the first things you learn in physics. So, if our universe were not translationally invariant, momentum would not be conserved and our world would look and act very differently.)
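The link between translational symmetry and momentum can be checked numerically with a small sketch. It assumes two particles on a line joined by a spring, so the force depends only on their separation and never on where they are; the setup, names, and numbers are hypothetical.

```python
def spring_force(x1, x2, stiffness=2.0, rest_length=1.0):
    """Force on particle 1 from a spring joining the two particles.

    It depends only on the separation x2 - x1, never on absolute position,
    which is what translational symmetry means here.
    """
    return stiffness * ((x2 - x1) - rest_length)


def simulate(x1, x2, p1, p2, mass=1.0, dt=0.001, steps=5000):
    """Advance two particles on a line; return final positions and momenta."""
    for _ in range(steps):
        force = spring_force(x1, x2)
        p1 += force * dt   # particle 1 feels +force
        p2 -= force * dt   # particle 2 feels the equal and opposite force
        x1 += p1 / mass * dt
        x2 += p2 / mass * dt
    return x1, x2, p1, p2


START = (0.0, 1.5, 0.3, -0.1)  # x1, x2, p1, p2

x1, x2, p1, p2 = simulate(*START)
total_before = START[2] + START[3]
total_after = p1 + p2

# The same experiment after "taking a step to the right" by 10 units.
sx1, sx2, sp1, sp2 = simulate(START[0] + 10.0, START[1] + 10.0, START[2], START[3])

if __name__ == "__main__":
    print("total momentum before:", total_before)
    print("total momentum after: ", total_after)
    print("separation, original vs shifted:", x2 - x1, sx2 - sx1)
```

Up to floating-point rounding, the total momentum stays the same throughout, and the shifted run behaves identically apart from the offset. If the force depended on absolute position (a wall at a fixed spot, say), both statements would fail.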

This doesn’t happen for the other particles in the Standard Model because only the Higgs mass is sensitive to the square of this cut-off scale. However, at a certain (electroweak) energy scale, the masses of the SM particles are directly or indirectly sensitive to this cut-off scale because they get their mass from the Higgs field [1].

This is a bit of an oversimplification. The corrections are not based on the mass of the particle, but rather, the interaction strength of the particle (coupling constant) with the field at hand. This coupling constant is proportional to the mass of the particle (squared mass of the particle in the self-coupling case of the Higgs) [2].